| Version 33 (modified by , 13 years ago) ( diff ) |

|---|

PostGIS Raster SoC Idea 2012 - Distance Analysis Tools for PostGIS Raster

Mentor: Pierre Racine

Idea:

The idea for this proposed project is to add two spatial analysis functions to PostGIS Raster that

implement two main ways of performing distance analysis: Euclidean distance calculation and

cost-weighted distance calculation.

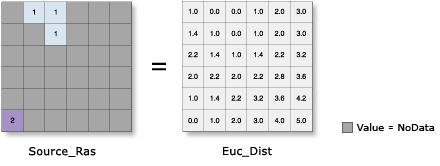

Euclidean distance function will create a distance surface representing the Euclidean distance from each cell in the source layer to the starting point or the closest source (as designated by user). The basic concepts of the algorithm would be using the center of the source cell to calculate the distance from it to the rest cells in the raster layer or within the user-defined extent.

Cost-weighted distance will create a raster layer representing the accumulative cost distance for each cell to the starting point or the closest source (as designated by user). A cost raster layer will be generated using one or several factor layers representing the impedance of passing through each cell according to user’s criteria. User can also put weights on the input factor layers to represent different levels of importance for those factors. Factors such as: elevation, slope, orientation, land use / land cover type; vehicle speed, accessibility; and a binary map layer could also be used as a mask for defining geographic extent or as a filter for the output cost layer. The accumulative cost values will be then assigned to each cell representing the cost per unit distance for moving through this cell.

GSoC 2012 Proposal:

http://google-melange.appspot.com/gsoc/proposal/review/google/gsoc2012/kya_na/1

Weekly Reports

- Week 1 Report (May 21st - May 25th)

- Week 2 Report (May 28th - June 1st)

- Week 3 Report (June 4th - June 8th)

- Week 4 Report (June 11th - June 15th)

Week 1 Report

What did you get done this week?

- Compiled PostGIS 2.0 sucessfully.

- Loaded raster data into PostgreSQL and practiced some query by finishing the raster/vector tutorial.

- Wrote an analysis of how Euclidean distance and Cost-weighted distance are computed in ArcGIS and GRASS.

- Setup wiki page.

What do you plan on doing next week?

- Learn more about PostGIS Raster concepts and functions

- Write an analysis of how Euclidean distance and Cost-weighted distance are computed in Oracle

- Start to write a proposal on how to adopt the concepts and algorithms in PostGIS.

Are you blocked on anything?

- As of now I was not blocked on anything yet, but working in a spatial database is something new and challenging to me. I will need to read and reserch more about it.

- However, it took me some time to understand how raster coverage is stored in PostGIS, and how Raster type works.

Analysis Report

How Euclidean distance and Cost-weighted distance are computed in ArcGIS and GRASS

- Euclidean Distance

Week 2 Report

What did you get done this week?

- Read Documents and Pierre Racine's presentations on PostGIS Raster; Learned more about the raster data storage and manipulation in PostGIS

- Read Oracle Spatial Documents on GeoRaster; Learned about raster data storage in Oracle.

What do you plan on doing next week?

- Learn more about PostGIS Raster development

- Write a comparison of raster data storage and manipulation in PostgreSQL and Oracle

- Write a proposal on how to adopt the concepts and algorithms of distance calculation in PostGIS.

Are you blocked on anything?

- It seems that Oracle Sptial dosenot provide distance analysis functions for GeoRaster data. Please let me know if I missed it.

However, by reading documents of GeoRaster, I got a better understanding of the raster data storage in spatial database. So I feel it would be helpful for me to write an analysis to compare the concepts of raster data storage and manipulation with PostGIS Raster and Oracle Spatial GeoRaster.

Comment from Mentor:

I really think we want to avoid having to produce an intermediate raster of sources. PostGIS is strong on raster/vector interaction and I really don't see why someone would prefer to provide sources as raster. The sources should come from a table of point. Now this raise a certain number of issues:

-What if the table contains more than one point?

-What if some of those points are outside the raster extent?

-Another issue is: What if the raster I want to produce does not fit into the PostgreSQL maximum field size?

These are the kind or difficulties one encounters when working in a database.

Think about large tiled coverage, Delaunay triangulation, aggregate functions and all the combinatory of "small/very large number of point" and "small/very large raster coverage". Ideally we want to provide a solution working for every limit cases.

Week 3 Report

What did you get done this week?

- Wrote a comparison of raster data storage and manipulation in PostgreSQL and Oracle

- Wrote a proposal on how to adopt the concepts and algorithms of Euclidean distance calculation in PostGIS.

What do you plan on doing next week?

- Write a proposal on how to adopt the concepts and algorithms of Cost-weighted distance calculation in PostGIS

Are you blocked on anything?

- Based on the feed back I got from Pierre last week, I agreed that we want to avoid having to produce an intermediate raster of the source point data. Since PostGIS Raster provide the capability of seamless vector-raster interactions, it is preferable that we expect the input source point data to be a vector layer, which is stored in PostgreSQL as a table of points with geomegry. The concepts of the distance calculation in ArcGIS and GRASS were all based on raster source data (ArcGIS will first rasterize the vector layer if necessary). So I was stucked at this point on how to avoid generating this intermediate raster layer if we want the source data to be vector points.

My Analysis Reports

Comment from Mentor:

- "Algorythm: a. Make an empty raster new_rast, using the same extent as the input vector point data source_point, set pixel value to NoData value." I don't think this is a good idea as you would have no control on the alignment of the raster produced. I want to be able to control the dimensions and how the raster is georeferenced. I think your best friend here is ST_AsRaster() which allows passing a geometry and some raster properties and burns the geometry in the raster. Basically ST_AsDistanceRaster() should not be so different from ST_AsRaster(), the only difference is that instead of burning the geometry in the raster, we compute a distance to the geometry for each pixel. It is very possible in this case that the geometry will be outside the extent of the requester raster. Should we want this to work for lines and polygons?

-You should avoid to do two passes to compute the final pixel values: one to compute the distance in pixel/cell and another to convert into the geographical distance.

-What do ArcGIS and GRASS do when there is more than one point? We need a more detailed approach for this case.

-I want also a kind of approach to the problem of billions of points. Does the knn indexing help us in quickly identifying what is the nearest point to the pixel without rescanning all the point for every pixels.

-In general I don't think just copying what ArcGIS or GRASS used to do is what we want. We want a true raster/vector integrated approach, scalable to billions of points, enabling the production of very large tiled rasters. I know this is a much more difficult problem but we want to end up with a variety of easy to use tools. I think this would be a much greater addition to PostGIS than just converting one raster to another raster. Think about aggregate functions or passing a geometry table name to the function. ST_AsRaster was done to work on only one geometry but how could it work to burn many geometries into one raster? Look at ST_UnionToRaster in the PostGIS raster working specifications. (http://trac.osgeo.org/postgis/wiki/WKTRaster/SpecificationWorking03)

-Look also at this mail: http://postgis.refractions.net/pipermail/postgis-users/2012-April/033875.html it might be a general solution working on small and big raster but might be slower than a specific solution. We could simply implement a bunch of custom function in C...

-Start thinking about the signature of some prototype SQL functions. Think about passing the name of a point table

-I think the constraints should be explicited first in your document so we know in which context we want all this to work.

I think the solution to this multipoint problem is the key to the algorithm we want to implement. There would be only one point, there would be no problem. The problem is: How do I know which distance to compute (to which point) for each pixel while I iterate on them. What if the number of point is greater than the number of pixel? Should we iterate on the point in this case instead of iterating on the pixels?

The point here is what to scan first and how many times? Do they rescan the whole raster for every points? That's ok if you have a low number of points but what if you have millions?

I think with the knn index already built you could scan pixels only once assigning the distance to the closest point. and that would be fast even if there are millions. Is this correct?

>> From: Pierre

>> I want also a kind of approach to the problem of billions of points. Does

>>the knn indexing help us in quickly identifying what is the nearest point to the

>>pixel without rescanning all the point for every pixels.

> From: Qing

> Yes, KNN index will definitely help us to identify the nearest point to the pixel.

> With the capability of PostGIS 2.0 to return sorted results from a GiST index, we

> can have the results ordered by the geometry distance between pixel centroid

> and identify the shortest one without having to rescan all the points. However,

> the "order by distance between bounding box centroid" works now for

> geometries. When it comes to point data, the bounding boxes are equivalent to

> the points themselves. So the same would apply to the center of pixel in our

> calculation. We now have a GiST index over the raster column. However, I am

> not sure about how we build spatial index embedded in the pixels or the raster

> geometry.

Not sure I understand well. Could converting pixel centroids into points and computing the knn index on the mix of points and centroids converted to points be an avenue?

I think, considering all the constraints, that we might have to end up with different approaches depending on different constraints. A simple one if some constraints are involved, a more complex one if more constraints are involved.

So in your analyses you have to put into relationship: constraints, features (like the possibility to define a limited distance or the possibility to work with line and polygons), possibilities (limits of the data structure imposed by the DB like the fact that all the geometries are on a different row), scalability, flexibility (we want a generic solution to implement other kind of distances) and performance.

Week 4 Report

What did you get done this week?

- Revised proposal for creating distance surface based on the comments and discussions with Pierre.

What do you plan on doing next week?

- Revise the proposal from last week according to the feedback from the mentor

- Write more detailed approaches according to different constraints

- Transfer all the Google docs documents to the wiki page

Are you blocked on anything?

- Pierre's comments and feedbacks are very helpful in guiding me through the problems I've encountered.